In the dynamic landscape of radar technology, the advent of software-defined radars has revolutionised the industry. This shift has allowed for the continuous enhancement of radar capabilities through over-the-air (OTA) updates. At the forefront of this evolution is Provizio, pioneering advancements in imaging radar perception. This white paper explores the capabilities of our software-defined radar systems by highlighting the improvements made to our perception stack through optimisation and fine-tuning over just a couple of days at CES 2024.

Traditionally, radar systems were static in their capabilities, limited by their hardware configurations. The emergence of software-defined radars has changed this paradigm, enabling continuous enhancements and updates to meet evolving requirements. In addition to using software-defined radar, our 5D Perception® system is built upon a sophisticated microservice architecture. Each microservice plays a crucial role in enhancing specific aspects of radar perception, and each can be updated independently, resulting in an agile and responsive system.

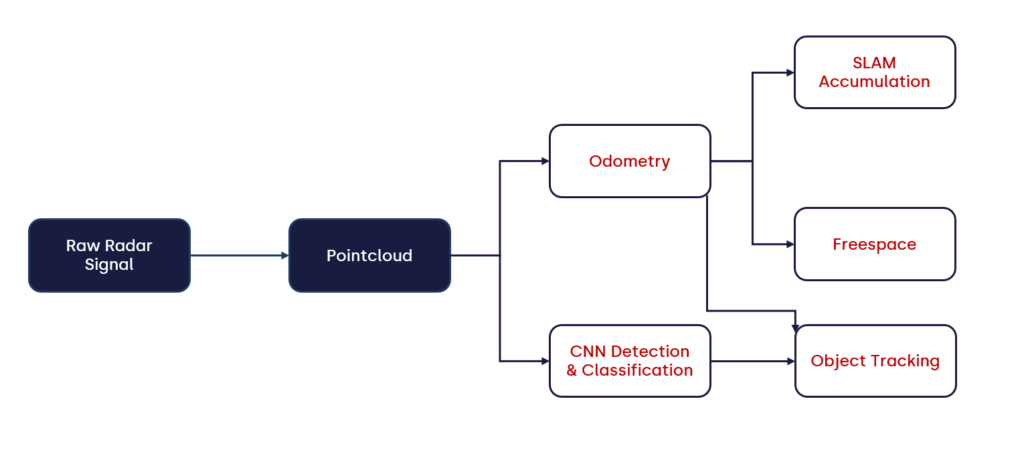

To simplify communication between the different microservices, we use DDS (Data Distribution Service). DDS is a networking middleware that hides much of the complexity of network programming by implementing a publish-subscribe pattern for sending and receiving data, events, and commands among the nodes. Publishers create topics such as odometry, classification, and freespace and publish data to them; DDS then delivers the data to the subscribers that have declared an interest in those topics. Below is a description of some of the main perception topics implemented in our 5D Perception® system.
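The publish-subscribe pattern described above can be illustrated with a minimal in-process sketch. This is not DDS and does not use any DDS API: `ToyBus`, its methods, and the sample payloads are hypothetical names chosen only to show how topics decouple publishers from subscribers.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Callback = Callable[[Any], None]


class ToyBus:
    """Hypothetical in-process stand-in for a publish-subscribe middleware."""

    def __init__(self) -> None:
        # Map each topic name to the callbacks that declared an interest in it.
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, sample: Any) -> int:
        """Deliver `sample` to every subscriber of `topic`; return the delivery count."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(sample)
        return len(callbacks)


bus = ToyBus()
received_odometry: List[Any] = []
received_freespace: List[Any] = []
bus.subscribe("odometry", received_odometry.append)
bus.subscribe("freespace", received_freespace.append)

# The publisher only names a topic; it does not know who is listening.
bus.publish("odometry", {"speed_mps": 12.5})
# Nobody subscribed to this topic, so the sample is delivered to no one.
bus.publish("classification", {"label": "car"})
```

A real DDS deployment adds discovery, typed topics, and quality-of-service settings on top of this basic idea; none of that is modelled here.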

Utilising a state-of-the-art neural network optimised for NVIDIA Orin, our 5D Perception® system excels in object detection, classification, and tracking. The microservice incorporates many steps, including:

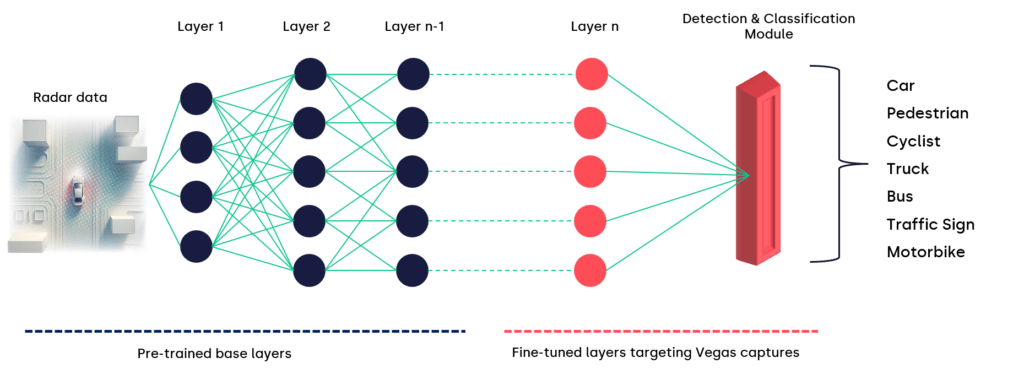

To optimise the detection and classification capabilities of our radar, we used our demo drives at CES to further fine-tune the neural network.

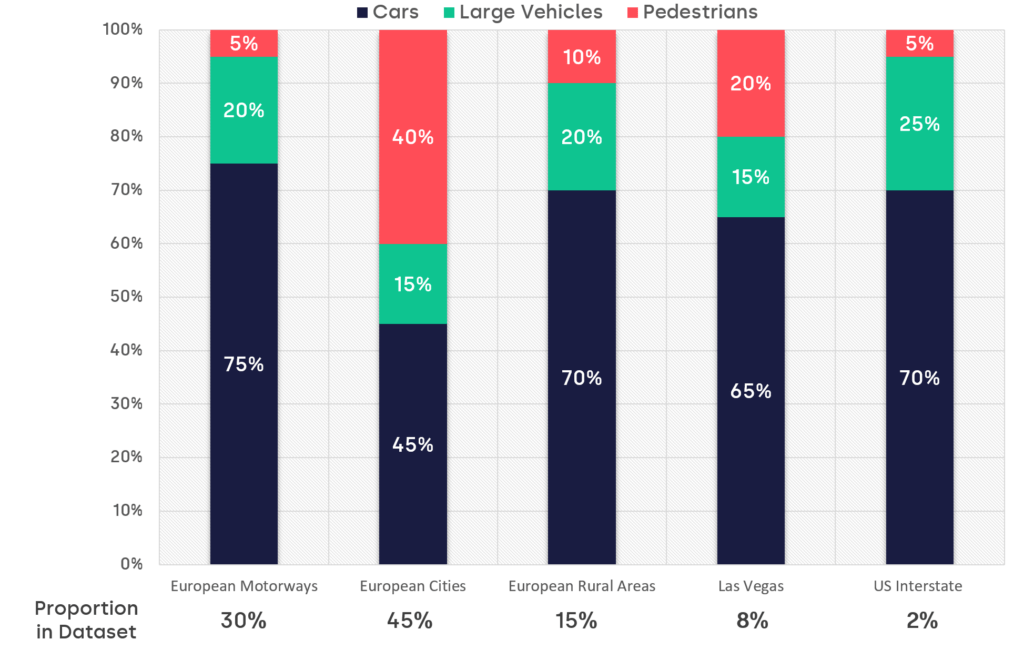

Our general dataset is a balanced distribution of captures taken in Europe and the US across multiple environments, including large and small cities, motorways, countryside, and car parks. At the time of CES 2024, we already had a Las Vegas dataset from our previous attendance at CES 2023, representing ~10% of this general dataset.

Using captures taken in Las Vegas during CES 2024, our engineers created a new dataset composed of 50% data from our general balanced dataset and 50% new data captured in Las Vegas. Starting from our pre-trained general model, which covers various scenarios, we ran a targeted fine-tuning session on our NVIDIA DGX A100 training computer.
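As an illustration of the 50/50 mix, the sketch below draws an equal number of samples from a general pool and a local pool. The function name `mix_datasets`, the pool sizes, and the choice to subsample (rather than oversample) are all assumptions; the source does not say how the mix was built.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def mix_datasets(general: Sequence[T], local: Sequence[T],
                 local_fraction: float = 0.5, seed: int = 0) -> List[T]:
    """Return a shuffled mix in which `local_fraction` of the items are local."""
    if not 0.0 < local_fraction < 1.0:
        raise ValueError("local_fraction must be strictly between 0 and 1")
    rng = random.Random(seed)
    # Largest total size that both pools can support at the requested ratio.
    total = int(min(len(local) / local_fraction,
                    len(general) / (1.0 - local_fraction)))
    n_local = round(total * local_fraction)
    n_general = total - n_local
    # Sample without replacement from each pool, then shuffle the result.
    mixed = rng.sample(list(local), n_local) + rng.sample(list(general), n_general)
    rng.shuffle(mixed)
    return mixed


# Hypothetical pools: 1000 general captures and 200 new Las Vegas captures.
general_pool = [("general", i) for i in range(1000)]
vegas_pool = [("vegas", i) for i in range(200)]
mixed = mix_datasets(general_pool, vegas_pool)
vegas_count = sum(1 for source, _ in mixed if source == "vegas")
```

Keeping half of the mix general is a common way to limit forgetting of other environments while the model adapts to the new one.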

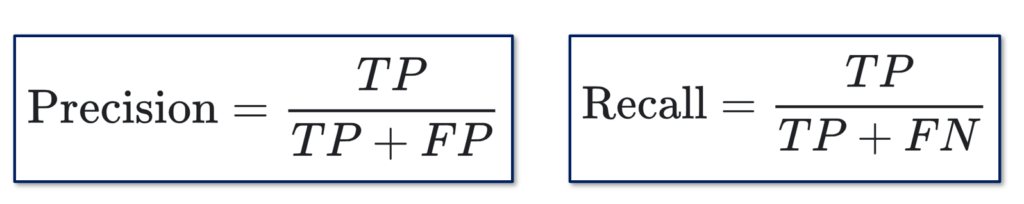

To benchmark the system, we used a capture of Las Vegas roads that had not been used for training, to validate that the fine-tuned model performed better. Two key metrics were used: precision (the share of detections that are correct) and recall (the share of real objects that are detected).

Note that precision matters less than recall here, because tracking removes most false positives: an entity such as a car is tracked only after it has been detected in at least 2 frames. False cars or pedestrians detected in only 1 frame are therefore ignored by our tracking microservice.
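The two metrics and the two-frame rule can be sketched as follows. This is a simplified illustration, not the tracking microservice: it assumes detections already carry consistent object IDs across frames and that the two detections must be in consecutive frames, neither of which is stated in the text. The function names and the toy frames are hypothetical.

```python
from typing import Dict, List, Set


def precision(true_pos: int, false_pos: int) -> float:
    """Share of detections that correspond to a real object."""
    total = true_pos + false_pos
    return true_pos / total if total else 0.0


def recall(true_pos: int, false_neg: int) -> float:
    """Share of real objects that were detected."""
    total = true_pos + false_neg
    return true_pos / total if total else 0.0


def confirmed_ids(frames: List[Set[int]], min_hits: int = 2) -> Set[int]:
    """IDs detected in at least `min_hits` consecutive frames."""
    streak: Dict[int, int] = {}
    confirmed: Set[int] = set()
    for detections in frames:
        # A streak continues only for IDs present in the current frame.
        streak = {obj_id: streak.get(obj_id, 0) + 1 for obj_id in detections}
        confirmed.update(obj_id for obj_id, hits in streak.items()
                         if hits >= min_hits)
    return confirmed


# Toy sequence: object 1 persists, objects 2, 3 and 4 each appear in one frame.
frames = [{1, 2}, {1, 3}, {1}, {4}]
kept = confirmed_ids(frames)
```

In this toy sequence only the persistent object survives confirmation, which is why single-frame false positives cost little downstream while a missed object (lower recall) cannot be recovered by tracking.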

The benchmark comprises 750 labelled entities across 250 frames of pure Las Vegas streets and roads, representing both day and night-time lighting conditions.

These benchmarks revealed an increase of nearly 9% in precision and recall for cars, and of up to 6% in recall and 5% in precision for large vehicles, with the new fine-tuned CES model. While the change may appear small, going from 0.715 to 0.778 in recall is a massive performance improvement for our model. In fact, the fine-tuned model achieved state-of-the-art performance on our internal radar detection and classification benchmark.
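As a quick check, and assuming the 0.715 and 0.778 figures refer to recall for cars, the quoted "nearly 9%" is consistent with a relative gain rather than an absolute one:

$$
\frac{0.778 - 0.715}{0.715} = \frac{0.063}{0.715} \approx 0.088 \quad (\approx 8.8\%)
$$

The corresponding absolute gain is 6.3 percentage points of recall.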

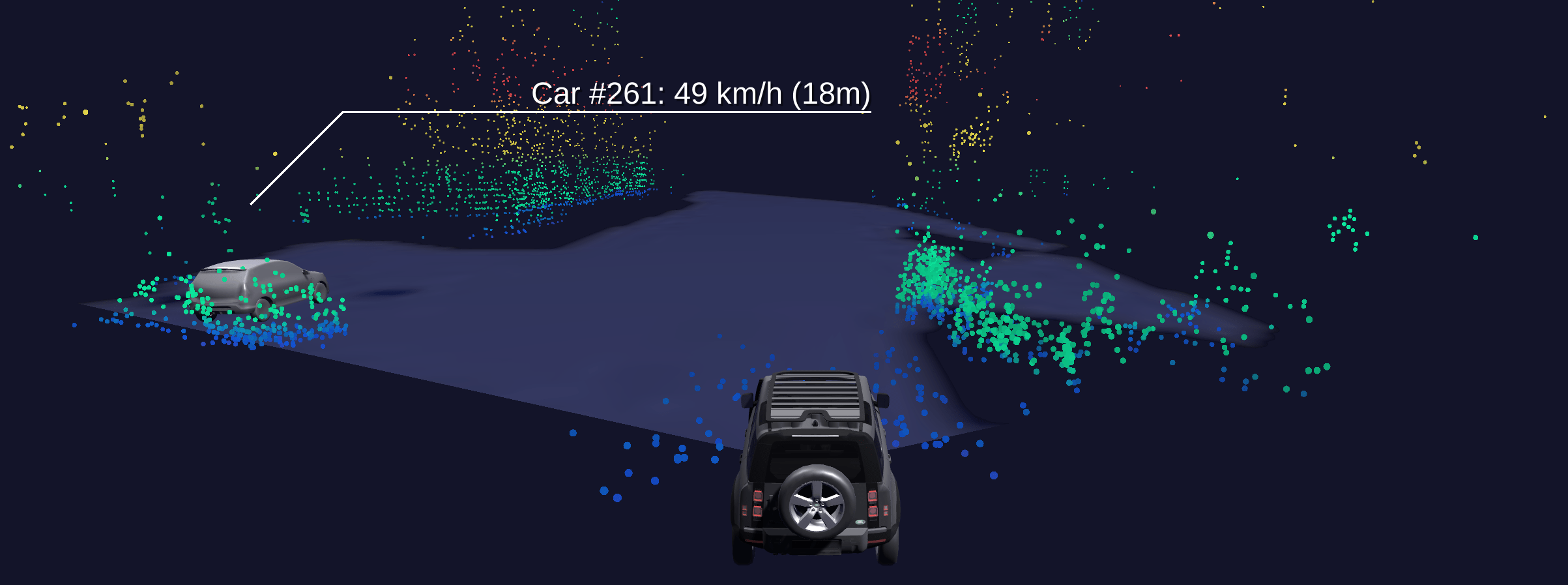

In the comparison below, we can see that cars are detected up to 20m further away (in medium-range mode, < 100m) by the CES fine-tuned model than by the previous model. This improvement also allows the system to evaluate the trajectory of vehicles over up to 10 more frames, producing 35% fewer false negatives.

Finally, the late-tracking system in the neural network was enhanced by making a slight adjustment to the `tracking_buffer` hyperparameter. We found that increasing the buffer from 20 frames to 30 frames improved tracking stability by 45% for pedestrians and by 25% for cars and large vehicles. For context, tracking stability is defined as the proportion of time an object is detected relative to the time it is actually present in the radar's field of view. This change allows a detected object to reappear in the radar field of view after up to 30 frames, which is equivalent to 3 seconds for a radar operating at 10Hz.
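To make the effect of the buffer concrete, here is a minimal sketch under one assumed reading of `tracking_buffer`: a track is held through up to that many consecutive missed frames and dropped afterwards. The functions `buffer_seconds`, `track_alive`, and `tracking_stability`, and the toy detection sequence, are hypothetical and are not taken from the actual tracker.

```python
from typing import List


def buffer_seconds(frames: int, rate_hz: float) -> float:
    """Convert a buffer length in frames to seconds at a given frame rate."""
    return frames / rate_hz


def track_alive(detected: List[bool], tracking_buffer: int) -> List[bool]:
    """Per-frame flag: is the track still held, given the miss tolerance?"""
    alive: List[bool] = []
    misses = None  # None means there is currently no track
    for hit in detected:
        if hit:
            misses = 0  # a detection starts or refreshes the track
        elif misses is not None:
            misses += 1
            if misses > tracking_buffer:
                misses = None  # too many consecutive misses: drop the track
        alive.append(misses is not None)
    return alive


def tracking_stability(alive: List[bool]) -> float:
    """Share of frames in which a continuously visible object was tracked."""
    return sum(alive) / len(alive) if alive else 0.0


# Toy case: an object visible for 30 frames but undetected for 25 of them.
detected = [True] + [False] * 25 + [True] * 4
stability_20 = tracking_stability(track_alive(detected, tracking_buffer=20))
stability_30 = tracking_stability(track_alive(detected, tracking_buffer=30))
seconds = buffer_seconds(30, 10.0)
```

In this toy case the 20-frame buffer loses the track for part of the gap while the 30-frame buffer bridges it. The numbers illustrate the mechanism only and are unrelated to the 45% and 25% figures reported above.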

The Freespace microservice is dedicated to identifying open driving areas from the radar data. Using only the point cloud, this service locates kerbs and pavements and highlights unidentified objects in the middle of the road, providing the information needed for local freespace pathfinding. Notably, the efficiency of the system allows us to achieve this at a rate of 20 FPS, without the need for a powerful in-cab server.

As we are constantly improving the microservice, the new captures taken in Las Vegas allowed us to publish a version that better accounts for variations in infrastructure (e.g., different road sizes and layouts) between Europe and the USA, resulting in a more versatile and robust system. Since the Freespace microservice relies primarily on data from point-cloud clustering, RANSAC road boundary estimation, and odometry, the fine-tuning process consisted of finding the right balance between raising the clustering threshold and maintaining reliable road boundary estimation.
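For readers unfamiliar with RANSAC, the sketch below fits a single straight boundary to 2D points while ignoring outliers. It is a generic textbook version, not the Freespace implementation: the straight-line model, the `threshold` and `iterations` values, and the synthetic kerb points are all assumptions made for illustration.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]
Line = Tuple[float, float, float]  # a*x + b*y + c = 0 with a^2 + b^2 = 1


def ransac_line(points: List[Point], threshold: float = 0.2,
                iterations: int = 200, seed: int = 0) -> Tuple[Line, List[Point]]:
    """Return the line supported by the most points, and those inlier points."""
    rng = random.Random(seed)
    best_line = None
    best_inliers: List[Point] = []
    for _ in range(iterations):
        # Hypothesise a line through two randomly chosen points.
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        a, b = y2 - y1, x1 - x2
        norm = (a * a + b * b) ** 0.5
        if norm == 0.0:
            continue  # coincident points define no line
        a, b = a / norm, b / norm
        c = -(a * x1 + b * y1)
        # Count the points lying within `threshold` of the hypothesised line.
        inliers = [(x, y) for x, y in points if abs(a * x + b * y + c) <= threshold]
        if len(inliers) > len(best_inliers):
            best_line, best_inliers = (a, b, c), inliers
    if best_line is None:
        raise ValueError("no valid line hypothesis found")
    return best_line, best_inliers


# Synthetic kerb roughly 3.5 m to the side, plus a few clutter points.
kerb = [(float(x), 3.5 + 0.05 * ((x % 3) - 1)) for x in range(40)]
clutter = [(5.0, 0.0), (12.0, -2.0), (20.0, 1.0), (30.0, -1.5), (33.0, 7.0)]
line, inliers = ransac_line(kerb + clutter)
```

The trade-off mentioned above shows up here in miniature: the more aggressively upstream clustering filters points, the fewer clutter points reach this step, but also the fewer boundary points remain to support the fitted line.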

As a result of these optimisations, the medium-range (100m) freespace detection distance for Las Vegas boulevards and large interstate roads went from an average maximum range of 53m to an average maximum range of 86m. This is a 62% increase in average maximum range for the detection of freespace, day and night, in all weather conditions.

By leveraging the power of software-defined radars and OTA updates, we are able to perform near real-time optimisation of our perception system to provide higher performance in a variety of areas - from classification & tracking, to radar-based odometry and freespace detection.

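Using a refined technique and measurements taken over only a few days, our system demonstrated gains of nearly 9% in precision and recall for cars, up to 6% in recall and 5% in precision for large vehicles, 45% in tracking stability for pedestrians and 25% for cars and large vehicles, and 62% in average maximum freespace detection range.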

Furthermore, these updates were delivered rapidly through the use of an in-house data processing pipeline, which uploaded the latest captures to the cloud, extracted and pre-processed the data, and then used the data for fine-tuning of our neural network models. In this way, our models could be seamlessly optimised overnight, delivering improved performance for the next day of captures and demos.

This is just a glimpse of the future of radar technology, where software systems running on the edge are constantly learning and adapting. We believe that making the transition to scalable L3+ a reality is a challenge that can be solved by continuously learning and improving our solution, enabled by technologies such as those outlined in this paper.

Provizio, Future Mobility Campus Ireland, Shannon Free Zone, V14WV82, Ireland

Newlab Michigan Central, Detroit, MI 48216, United States